Digital PR has been a buzzword in online marketing for quite a while now, and it’s a popular approach for businesses that are looking to grow their online profile while also landing important links back to their website.

Here at Cedarwood, digital PR has always been an important part of our service offering, not just for the visibility it garners for our clients but also for the positive impact it can have on SEO campaigns. As a result, we have a lot of experience (over seven years’ direct experience, in fact!) in rolling out effective digital PR campaigns.

Although it is one of the more popular approaches within the SEO mix, digital PR can fail to have the desired impact if it isn’t done properly, so below we’ve included some top tips on how to create an effective digital PR campaign.

The importance of Digital PR

Before we dive into five key tips for creating an effective digital PR campaign, let’s first look at why digital PR is important and how it can help you to achieve your goals across both SEO and overall brand awareness.

Digital PR is important because it can help businesses achieve a number of goals, including:

➡️ Increase brand awareness: When your brand is featured in high-quality, relevant publications, it can help to raise awareness of your company and its products or services. This can lead to increased traffic to your website, more leads, and ultimately, more sales.

➡️ Generate leads: A well-executed digital PR campaign can also help to generate leads for your business. When journalists and other influencers write about your company, they often include a call to action, such as a link to your website or a way to sign up for your email list. This can help you to capture the contact information of potential customers who are interested in learning more about what you have to offer.

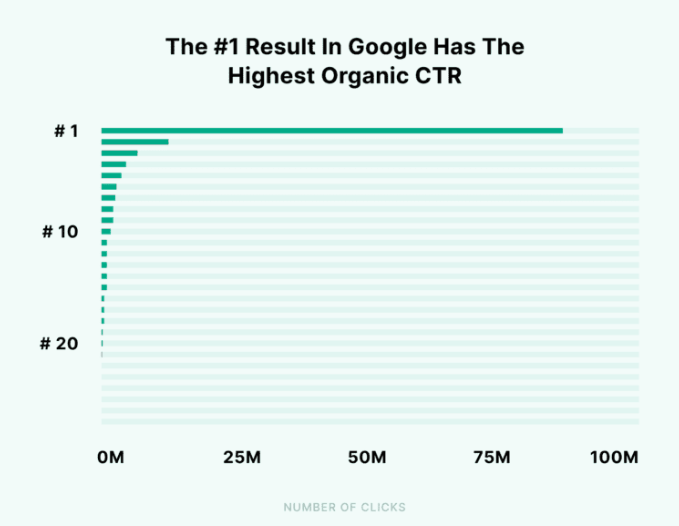

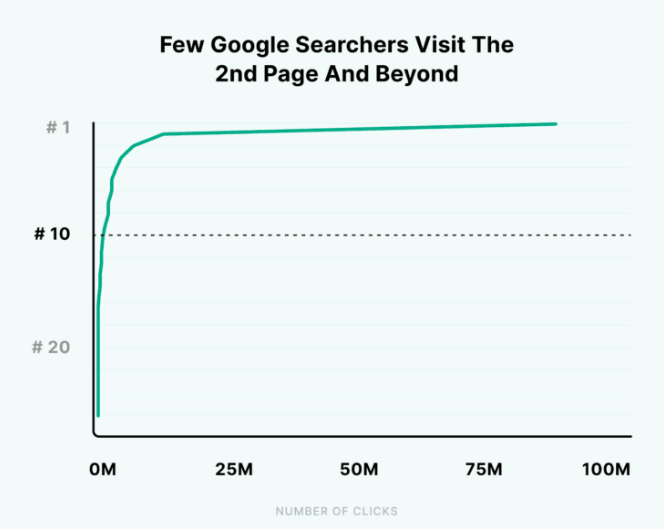

➡️ Improve SEO: When your brand is mentioned in high-quality, relevant publications, it can help to improve your website’s search engine ranking. This is because search engines take into account the number and quality of backlinks to a website when ranking it in search results.

➡️ Build relationships with journalists and influencers: Digital PR can also help you to build relationships with journalists and other influencers in your industry. These relationships can be valuable assets for your business, as they can help you to get your company featured in the media and reach a wider audience.

There’s a lot of value that digital PR can add, but it’s important to understand how it fits into your broader marketing mix so that you can utilise it effectively.

Five Key Tips

1. Start With Your Strategy

Strategy plays a key role in your digital PR campaigns. Understanding your client’s audience, the current landscape and the type of content that will resonate with that audience (and with journalists!) is important to driving success.

So let’s start off with some key considerations and questions to ask around your digital PR strategy:

➡️ Your target audience: Who are you trying to reach with your digital PR campaign? What are their interests? What are their pain points?

➡️ Your goals: What do you want to achieve with your digital PR campaign? Do you want to increase brand awareness? Generate leads? Improve SEO?

➡️ Your key messages: What are the key messages you want to communicate with your digital PR campaign? What do you want journalists and influencers to take away from your story?

➡️ Your content: What type of content will you create for your digital PR campaign? Will you write blog posts? Create infographics? Produce videos?

➡️ Your distribution strategy: How will you distribute your content? Will you share it on social media? Submit it to media outlets? Pitch it to influencers?

➡️ Your measurement strategy: How will you measure the success of your digital PR campaign? Will you track website traffic? Leads generated? SEO ranking?

It might seem like a lot, but putting time into understanding your target audience, goals and distribution strategy will save you a lot of time further down the line, so invest the time early on to make your campaigns as efficient as possible.

2. Do Your Research

Undertaking research at the start of a digital PR campaign is another way to ensure you save time further down the line, don’t repeat stories which have already been covered and really maximise your outreach capacity and capabilities.

When you’re doing your research for a digital PR campaign, there are a few key things to keep in mind:

➡️ Identify your target audience: Who are you trying to reach with your campaign? What are their interests? What publications do they read? What influencers do they follow?

➡️ Identify the right publications and influencers: Once you know who your target audience is, you can start to identify the right publications and influencers to reach out to. Consider the following factors when making your selection:

➡️ Relevance: The publication or influencer should be relevant to your target audience.

➡️ Reach: The publication or influencer should have a large enough audience to reach your target audience.

➡️ Credibility: The publication or influencer should be credible and respected by your target audience.

➡️ Find out what they’ve written about in the past: Take a look at the publications and influencers you’ve identified and see what they’ve written about in the past. This will give you a good idea of their style, their interests, and the types of stories they’re interested in.

➡️ Find out how to contact them: Once you’ve identified the right publications and influencers, you need to find out how to contact them. This may involve finding their email address, phone number, or social media profiles.

By doing your research, you can increase your chances of success with your digital PR campaign.

Here are some additional tips for doing your research:

➡️ Use online tools: There are a number of online tools that can help you with your research, such as Google News, Cision, and Meltwater. These tools can help you to find relevant publications, influencers, and stories.

➡️ Talk to people in your industry: Talk to people in your industry who are familiar with digital PR. They can share their insights and advice with you.

➡️ Attend industry events: Attending industry events is a great way to meet journalists and influencers and learn more about their needs.

Taking additional time to thoroughly research all of the above and build your contact list can really help when it comes to outreaching the campaigns, so make sure you put the groundwork in before you start building the campaigns to maximise the success.
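As a rough illustration, the relevance, reach and credibility criteria above can be turned into a simple scoring sheet for ranking a contact list. The sketch below is a minimal example and not a standard method: the `Outlet` class, the 1 to 5 scales, the weights and the outlet names are all assumptions made up for illustration, so adjust them to suit your own campaign.

```python
from dataclasses import dataclass


@dataclass
class Outlet:
    """A publication or influencer, scored 1-5 on each criterion (hypothetical scale)."""
    name: str
    relevance: int
    reach: int
    credibility: int


# Illustrative weights only: relevance is assumed to matter most here.
WEIGHTS = {"relevance": 0.5, "reach": 0.2, "credibility": 0.3}


def score(outlet: Outlet) -> float:
    """Weighted sum of the three criteria."""
    return (
        WEIGHTS["relevance"] * outlet.relevance
        + WEIGHTS["reach"] * outlet.reach
        + WEIGHTS["credibility"] * outlet.credibility
    )


def prioritise(outlets: list[Outlet]) -> list[Outlet]:
    """Return outlets ordered from highest to lowest score."""
    return sorted(outlets, key=score, reverse=True)


# Made-up example outlets and scores.
outlets = [
    Outlet("Niche Blog", relevance=4, reach=1, credibility=2),
    Outlet("National Daily", relevance=2, reach=5, credibility=5),
    Outlet("Trade Weekly", relevance=5, reach=2, credibility=4),
]

ranked = prioritise(outlets)
for outlet in ranked:
    print(f"{outlet.name}: {score(outlet):.1f}")
```

With these example weights, a highly relevant trade title ranks above a national title with far greater reach, which reflects the idea of focusing on the contacts that count rather than the biggest names.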

3. Create High Quality Content

If you want journalists to link to your website, you need to create great content that gives them a reason to link to it. So whether it’s a data piece, a piece of thought leadership or just something of genuine interest to the user, make sure that the content you create is relevant, up to date and, most importantly, something the user actually cares about.

Great content for Digital PR comes in a number of different formats and can include:

➡️ Data-driven content: This type of content uses data and statistics to tell a story. It can be very persuasive and can help you establish yourself as a thought leader in your industry. For example, you could create a blog post that analyzes industry trends or a report that provides insights into your target audience.

➡️ Compelling visuals: Images, infographics, and videos can be very effective at engaging your audience and driving traffic to your website. Make sure your visuals are high-quality and relevant to your content.

➡️ Interviews and thought leadership pieces: Interviews with experts in your industry can be a great way to generate backlinks and establish yourself as a thought leader. You could also write thought leadership pieces that share your insights on industry trends or best practices.

➡️ Case studies: Case studies can be a great way to demonstrate the value of your products or services. They can also help you generate leads and build relationships with potential customers.

➡️ Trendjacking: Trendjacking is the practice of capitalizing on current trends to create content that is relevant and timely. This can be a great way to generate buzz for your brand and attract new customers.

Undertaking the first two steps will help you to better understand the audience and it’s important to keep this in mind when creating high quality content to ensure that when you outreach it to journalists it’s going to be relevant to both their audience and yours.

4. Promote Your Content

In the simplest terms, promoting your content means outreaching it: getting it in front of journalists so that you get the right level of coverage for your client, at the right time. Promotion is just as important as the quality of the content, if not more so. It doesn’t matter how great your content is; if no one sees it, it will have no impact on your overall marketing efforts!

When outreaching to journalists the first step is to create an effective media list – remember – you don’t have to include everyone on your media list, rather focus on the contacts that count, people who are likely to cover your story or who have a genuine interest in what you are doing.

Here are some top tips to land coverage with journalists:

➡️ Do your research. Before you reach out to any journalists, take the time to learn about their work and their audience. What kind of stories do they typically write? What are their interests? Once you have a good understanding of their needs, you can tailor your pitch accordingly.

➡️ Make a great first impression. Your subject line is the first thing a journalist will see, so make sure it’s clear, concise, and attention-grabbing. The body of your email should also be well-written and engaging. Get to the point quickly and clearly, and make sure your pitch is relevant to the journalist’s interests.

➡️ Be helpful and responsive. If a journalist is interested in your story, be prepared to provide them with all the information they need. This includes high-quality images, videos, and other supporting materials. Be responsive to their questions and requests, and make sure they have everything they need to move forward with the story.

➡️ Be patient. It takes time to build relationships with journalists. Don’t expect to get a response from every pitch you send out. Just keep pitching good stories, and eventually you’ll start to get results.

You won’t always get it right first time, but taking the time to build out your media lists and prepare them effectively will play a key role in ensuring that you make the most of your opportunity.

It’s also important to stand out – in a crowded area where journalists receive hundreds of PR pitches each day, how do you make sure that you stand out from the crowd?

➡️ Personalize your pitches. Don’t just send out a generic email to a list of journalists. Take the time to address each journalist by name and tailor your pitch to their specific interests.

➡️ Offer exclusive content. If you can offer journalists exclusive content, they’ll be more likely to take a look at your pitch. This could be a press release, a white paper, or even an interview with an expert.

➡️ Be persistent. If you don’t hear back from a journalist right away, don’t give up. Follow up with them a few days later to see if they have any questions.

Timing is also key – ensuring that you outreach at the right time to the right person plays a key role in getting the coverage that you are looking for.

5. Measure Results

Measuring and evaluating your digital PR campaigns plays a key role in getting the most out of them, and the learnings help you to keep evolving and improving your offering. Digital PR is constantly changing, so staying on top of your game is key. Clear reporting also helps your clients to understand the value that you are bringing.

To start with, you need to be clear about what you want to measure. Approaches here include:

➡️ Set clear goals: Before you launch your campaign, set clear goals for what you want to achieve. This will help you track your progress and measure your success.

➡️ Use a variety of metrics: Don’t rely on just one metric to measure the impact of your campaign. Use a variety of metrics to get a more complete picture of your results.

➡️ Track your results over time: Don’t just measure the impact of your campaign at the end. Track your results over time to see how your campaign is performing.

➡️ Make adjustments as needed: If you’re not seeing the results you want, make adjustments to your campaign strategy.

Don’t be afraid to make updates and changes as you need – this will help you to ensure you keep firmly fixed on the overall goal of delivering value to your clients and the reach/coverage that they want.

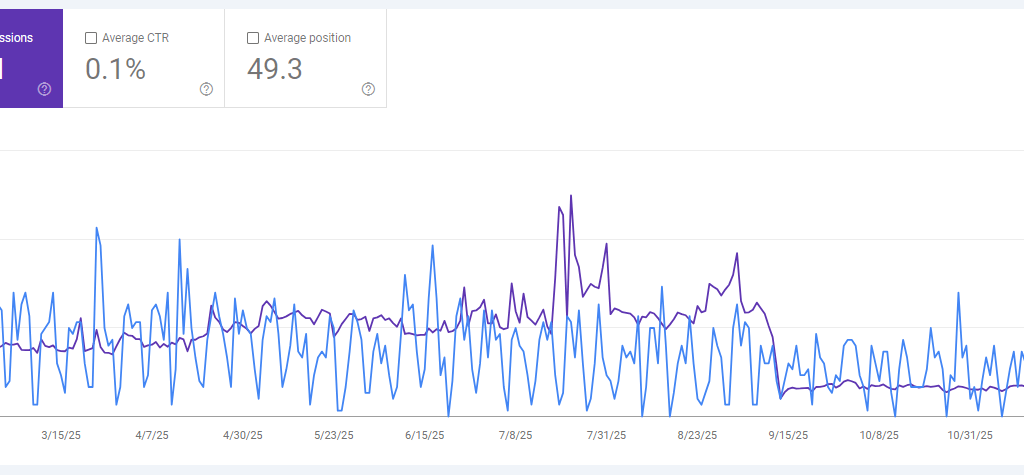

There are a number of ways to measure performance, but here are some of the most common metrics:

➡️ Media coverage: This is the most basic metric, and it simply measures the number of articles, blog posts, and other pieces of media that mention your brand.

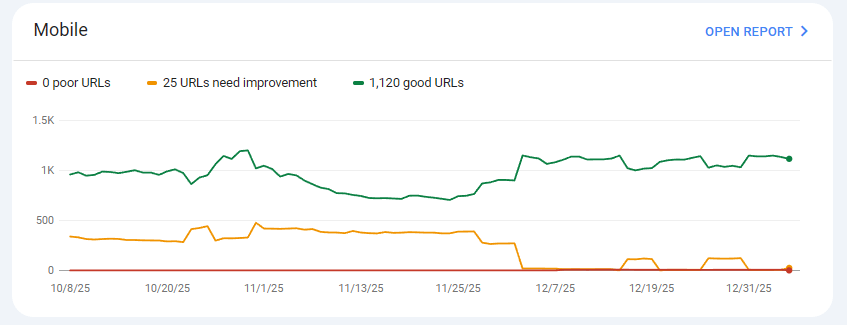

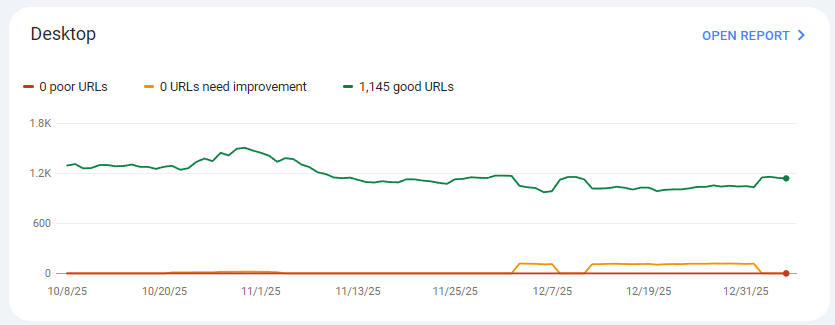

➡️ Link building: This metric measures the number of links to your website from other websites. Links are important for SEO, so this metric can give you an idea of how well your campaign is helping to improve your website’s search engine ranking.

➡️ Social media engagement: This metric measures the number of likes, shares, and comments on your social media posts. It’s a good way to measure how well your campaign is resonating with your target audience.

➡️ Brand awareness: This metric measures how well people know your brand. You can measure brand awareness through surveys, polls, and social media analytics.

➡️ Website traffic: This metric measures the number of people who visit your website. It’s a good way to measure the overall impact of your campaign, as more traffic means more people are learning about your brand.

Whichever metrics you use, it’s important to remember that you always need to link them back to revenue and results for your client. These are their key business metrics, so keep in mind how your reporting ties back to what your client is looking to achieve.
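To make the idea of tracking a variety of metrics over time more concrete, here is a minimal sketch that compares two monthly snapshots and reports the percentage change for each metric. The `Snapshot` class, the field names and every figure in it are invented for illustration and are not real campaign data; in practice the numbers would come from your own analytics and coverage reports.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Snapshot:
    """Monthly totals for one campaign (hypothetical fields)."""
    month: str
    coverage: int   # pieces of media coverage
    links: int      # links earned to the website
    sessions: int   # website visits
    leads: int      # leads generated


METRICS = ("coverage", "links", "sessions", "leads")


def percent_change(before: int, after: int) -> Optional[float]:
    """Percentage change from before to after, or None if there is no baseline."""
    if before == 0:
        return None
    return round((after - before) / before * 100, 1)


def compare(baseline: Snapshot, latest: Snapshot) -> dict:
    """Percentage change per metric between two snapshots."""
    return {
        metric: percent_change(getattr(baseline, metric), getattr(latest, metric))
        for metric in METRICS
    }


# Made-up figures for two consecutive months.
baseline = Snapshot("2023-05", coverage=4, links=10, sessions=2000, leads=20)
latest = Snapshot("2023-06", coverage=6, links=15, sessions=2500, leads=23)

changes = compare(baseline, latest)
for metric, change in changes.items():
    print(f"{metric}: {change}%")
```

Keeping leads alongside coverage and links in the same comparison is one simple way to keep the report tied to the client’s business results rather than to coverage alone.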

Final Thoughts

In addition to everything that we have mentioned above, at the end of the day an effective digital PR campaign all comes down to whether the user consumes it, engages with it and feels something for it – after all we are trying to create something which sits within the human interest angle.

Keeping this in mind, additional elements which will play in to the success of your campaigns include:

- The quality of your relationships with journalists

- Your ability to generate buzz and excitement around your content

- Your ability to adapt your strategy as the campaign progresses.

Each of these plays its own important role in ensuring that your campaigns get off the ground, so in addition to some great planning and activation, make sure you take the time to build the relationships and research the impact of your content. This is essential to gaining that all-important coverage!

To find out more about how we can help you with your digital PR campaigns, get in touch!
